This post describes some approaches that Windows desktop app devs might consider for having a screen reader make a specific announcement. This includes leveraging the new UI Automation (UIA) Notification event introduced in the Windows 10 Fall Creators Update.

Introduction

By default, the screen readers your customers use will convey information related to where your customers are currently working. If a screen reader made announcements about other things going on in an app, the resulting distractions could make working in the app very difficult. But questions are periodically raised about how a desktop app can take action that leads to a screen reader making a particular announcement, even though the UI where the screen reader is currently located doesn't convey the information in that announcement.

So the first thing to consider in this situation is whether it's really helpful to your customers to have the announcement made. There are certainly critically important scenarios where an announcement must be made, and silence is to be avoided. You should never hear your customers say "Did anything just happen?" when something important has just happened in your app. There are also scenarios where announcements can be an unwelcome distraction and really don't add any value to your customers' experience. For the discussion below, we'll assume that it is helpful to your customers for a particular announcement to be made.


Are you sure a particular UI design couldn't result in a helpful announcement being made by default?

Before considering how specific action on your app's part might lead to a screen reader making an announcement, it's worth considering whether a particular UI design could lead to the announcement being made by default, in a way that's a good experience for everyone. For example, say some background transaction is in progress, and when some unexpected error occurs, today your app shows the text "Your really important transaction did not complete!" somewhere near the bottom of its window. By default, Narrator probably won't announce that important text, given that it's interacting with other UI in the app at the time the text appears, even though your customer may need to take time-sensitive action.

But say, instead of the text appearing in some corner of your app, a message box appeared which explained what's going on. Narrator would make your customer aware of the message box immediately, and your customer could access all the information in the message box at that time. What's more, could a message box be the preferred approach for all customers? A customer using magnification would be made aware of the transaction result, where they might not have been if the magnified view didn't happen to include the text that previously appeared in a lower corner of the app. In fact, the risk of any customer not noticing the time-sensitive information is reduced, regardless of whether they use assistive technology.


Figure 1: A message box showing important status information. A screen reader will announce the message box appearing.

Now, you may have considered the above, and still feel that the best interaction model does not include a message box. But the important thing is that you've considered it.

Avoid well-intentioned actions which might degrade the customer experience

Sometimes you find that you've considered all options available to your desktop app, and you feel there really isn't a practical path to deliver the experience you want for all your customers. But you're determined to deliver the best experience you can, and so you start exploring what's technically possible.

This is where we need to be careful that your app doesn't do something just because it's technically possible, rather than because it's good for your customers.

For example, say you look through the list of UI Automation (UIA) events that can be raised by your app, possibly through an interop call to a native function. You notice the UIA SystemAlert event, and find that your app can raise that event without too much work. What's more, Narrator reacts to the event, and so can make your customer aware of that time-sensitive information. But the SystemAlert event is intended for alerts of a system-wide nature. It's going to be uncommon for an app to be making an announcement that your customer would consider similar in nature to a system alert, so raising that event could be really confusing. So avoid raising a type of event that isn't a good match for your app's scenario.

Another example of where specific action by your app could be problematic for your customers relates to overloading a control with information that's really not relevant to it. Say your customer invokes a "Buy" button. Your app begins the purchase transaction, which fails immediately, and you show text at the bottom of the app saying something like "No network connection, try again later". If Narrator is still interacting with the "Buy" button, you might be tempted to set that same status text on a UIA property of the button, perhaps the UIA HelpText property. You try this out and find that Narrator notices the change in HelpText on the button, and so announces something like "Buy, button, No network connection, try again later". On the surface, that might seem helpful, but consider the following:

1. There's now duplication of information being conveyed by the app. Is it possible that in some scenarios a screen reader will make announcements that include this duplication?

2. The approach assumes the screen reader will still be located at that particular button at the time the HelpText property changes. Often there's no guarantee that this will be the case.

3. Conveying status information like this relies on the information never becoming stale while it's still accessible. Your customer would never want to reach the button and hear a status that no longer applies.

If state information is conveyed visually on a button, then it's essential that that information is also conveyed through UIA. But I'd not recommend trying to manipulate a control's UIA representation in order to trigger some announcement which is really not a natural part of the control itself.

Can a desktop app take a leaf out of the web's playbook?

While this post is focused on the experience in desktop apps, similar discussions apply to web UI around what actions result in a screen reader announcing a change in the UI. With web UI, screen readers might make announcements because a web page reloads, or because the page uses LiveRegions. Another approach used with web UI is to move keyboard focus to the text that needs to be announced. But when considering that last approach, it's important that by default, static text isn't inserted into the app's tab order. Whenever your customers tab or shift+tab around your app's UI, they expect to reach interactable controls. If they reach static text, they'll wonder why they've been taken there. So by default, don't include static text in the tab order.

And yeah, I know I've said that before in other posts. But given that devs still insert static text into the tab order because they're under the impression that that's required in order for a screen reader to reach the text, I do think it's worth me saying it again:

A screen reader can access static text labels without the labels being in the tab order.

And this is where web UI can achieve the desired results through specific use of the "tabindex" attribute. By setting tabindex="-1" on an element, a web page can make text focusable and set focus to it programmatically in order for a screen reader to announce it, without inserting the text into the tab order. If you were to follow a similar approach in a desktop app, you'd want to deliver the same experience. That is, your app would programmatically set keyboard focus on the text when the announcement is to be made, but the text element would still not be in the tab order.

As far as I know, this approach is not applicable to WinForms apps, as Labels are not designed to get keyboard focus at all, regardless of whether the Label is inserted into the tab order.

But WPF app devs may be somewhat interested in this approach in specific circumstances. If you want Narrator to announce the text shown in a TextBlock, all you need to do is set the TextBlock's Focusable property to true and call its Focus() method. And hey presto, the text in the TextBlock will be spoken. But taking that action leaves the TextBlock in the tab order, and as such, as your customer later tabs around your app, they keep reaching the TextBlock for no apparent reason. (I wouldn't really want to get involved with trying to set the TextBlock's Focusable property back to false later in the hope of delivering a smooth experience.) So by default, I do feel this approach can be problematic, and if another more straightforward approach is available, I'd avoid messing with a WPF TextBlock's Focusable property.

So why am I even mentioning the WPF TextBlock Focusable thing? Well, I do know of one app that's taken this approach. The app's state would transition such that it briefly presented no focusable controls, and instead presented a static text string conveying the app's state. I forget what the text was, but let's assume it was something like "Please wait…". The app wanted the "Please wait…" string to be announced, and so it made the associated TextBlock focusable and set focus on it. The fact that the TextBlock was then in the tab order had no negative impact, because there was nothing else to tab to. Once the brief waiting period was over, the TextBlock was collapsed, and keyboard focus was set on a newly visible interactable control. The app's requirements around which versions of the .NET Framework and Windows were available to it limited its options for having the text string announced, so this approach seemed the most attractive.

So if this really does seem to have potential to be helpful to your customers, consider the (unlocalized) demo code below. First we have a Button and a collapsed status TextBlock:

```xml
<Button x:Name="BuyButton" Width="300" Click="Button_Click">Buy</Button>

<TextBlock x:Name="BuyStatus" HorizontalAlignment="Center" Visibility="Collapsed">Please wait...</TextBlock>
```

In the Button's Click handler, we make the TextBlock visible and focusable, set keyboard focus to it, and disable the Button.

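```csharp
private void Button_Click(object sender, RoutedEventArgs e)
{
    // Show the status text, and make it focusable so that it can take keyboard focus.
    BuyStatus.Visibility = Visibility.Visible;
    BuyStatus.Focusable = true;

    // Moving keyboard focus to the TextBlock leads Narrator to announce its text.
    BuyStatus.Focus();

    // Prevent the purchase from being started again while we're waiting.
    BuyButton.IsEnabled = false;
}
```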

If I tab to the Button and invoke it with a press of the Space key, this is Narrator's announcement:

Buy, button,

Space

Please wait...,

Once the brief waiting period is over, we'd collapse the status text and re-enable whatever other controls are appropriate.
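That clean-up step might be sketched as follows. This is a minimal sketch, assuming the same BuyStatus TextBlock and BuyButton Button declared in the XAML above; the BuyStatusHelper class and its EndWaiting() method are hypothetical names, and where you call it (for example, when the purchase attempt finishes) depends on your app. It follows the app described earlier: collapse the text, then put keyboard focus on an interactable control.

```csharp
using System.Windows;
using System.Windows.Controls;

// Hypothetical helper: reverses the changes made in the Buy button's Click handler
// once the brief waiting period is over.
internal static class BuyStatusHelper
{
    public static void EndWaiting(TextBlock buyStatus, Button buyButton)
    {
        // Collapse the status text. A collapsed element isn't visible,
        // so WPF won't give it keyboard focus while it stays collapsed.
        buyStatus.Visibility = Visibility.Collapsed;

        // Re-enable the button, and move keyboard focus to it so that focus
        // lands on an interactable control rather than on hidden text.
        buyButton.IsEnabled = true;
        buyButton.Focus();
    }
}
```

In the code-behind, this would be called as `BuyStatusHelper.EndWaiting(BuyStatus, BuyButton);`.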

So what about LiveRegions?

Ok, so LiveRegions can be very helpful, but whenever anyone mentions them, people say that LiveRegions are overused or inappropriately used, and generally aren't used in ways that help your customers. So let's just assume that you've considered whether it's appropriate to use a LiveRegion in your scenario, and you've concluded that a LiveRegion would be a great way to help your customers.

The next consideration is whether it's practical for your desktop app to leverage a LiveRegion. The answer to that pretty much breaks down as follows:

UWP XAML

Natively supported through the AutomationProperties.LiveSetting property and LiveRegionChanged event.

WPF

Supported since the accessibility improvements in .NET Framework 4.7.1, through the AutomationProperties.LiveSetting property and the LiveRegionChanged event.

WinForms

Not natively supported, but you can effectively turn a Label into a LiveRegion yourself, by following the steps described at Let your customers know of important status changes in your WinForms app.

Win32

Supported, but it requires knowledge of the detailed steps described at How to have important changes in your Win32 UI announced by Narrator.
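For the WPF entry above, a minimal sketch of the .NET Framework 4.7.1 LiveRegion support might look like this. It assumes the app targets .NET Framework 4.7.1 or later (apps targeting earlier versions may need to opt in to the newer accessibility behavior), and that the status is shown in an existing, visible TextBlock; the LiveRegionHelper class and its method names are hypothetical. The choice of Polite rather than Assertive is also just an example.

```csharp
using System.Windows.Automation;
using System.Windows.Automation.Peers;
using System.Windows.Controls;

// Hypothetical helper. Assumes .NET Framework 4.7.1 or later, which added
// AutomationProperties.LiveSetting and AutomationEvents.LiveRegionChanged to WPF.
internal static class LiveRegionHelper
{
    // Mark the TextBlock as a LiveRegion, for example after InitializeComponent().
    // Polite is an example choice; AutomationLiveSetting.Assertive is also available.
    public static void MakeLive(TextBlock statusText)
    {
        AutomationProperties.SetLiveSetting(statusText, AutomationLiveSetting.Polite);
    }

    // Update the visible status text, then tell UIA clients that the LiveRegion changed.
    public static void UpdateStatus(TextBlock statusText, string newText)
    {
        statusText.Text = newText;

        // Use the existing AutomationPeer if there is one, otherwise create it.
        AutomationPeer peer = UIElementAutomationPeer.FromElement(statusText)
            ?? UIElementAutomationPeer.CreatePeerForElement(statusText);

        if (peer != null)
        {
            peer.RaiseAutomationEvent(AutomationEvents.LiveRegionChanged);
        }
    }
}
```

Because the text being announced is the same text shown on the screen, this keeps the LiveRegion tied to real visuals, which is the use LiveRegions are intended for.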

Introducing the new UiaRaiseNotificationEvent

There's a common use of LiveRegions in desktop apps today which is contributing to the sentiment that LiveRegions are being used inappropriately. That is, LiveRegions are being used to trigger an announcement by Narrator, when the announcement has no related visuals on the screen. A LiveRegion is intended to mark some area on the screen as being "live", and so when that area changes, a screen reader can examine the updated area, and make a related announcement based on the content of the updated area. If some text has changed in the area, or an image or icon conveying status has changed, then the screen reader can announce that change.

However, some desktop apps want to trigger an important announcement by the screen reader even when there's no text, image, icon or any other element shown on the screen that provides the text associated with the announcement. In that case, the app might still create some UI, mark it as a LiveRegion, and then take steps for the UI to have no visual representation on the screen. Done in a particular way, this may indeed trigger a screen reader announcement. But it also may leave that element accessible to the screen reader later, still conveying the text associated with the earlier announcement. That can lead to a confusing or misleading experience, so if this "hidden" LiveRegion approach is taken, it must be taken with great care.

And now, thanks to the introduction of UiaRaiseNotificationEvent() in the Windows 10 Fall Creators Update, you may have an additional option.

The UIA Notification event may provide a way for your app to raise a UIA event which leads to Narrator simply making an announcement based on text you supply with the event. In some scenarios, this could be a straightforward way to dramatically improve the accessibility of your app.

Important: If the UiaRaiseNotificationEvent() functionality is available to your app, it's more important than ever to consider the balance between failing to make your customers aware of important information, and providing so much information that it becomes an unhelpful distraction. If your app starts calling UiaRaiseNotificationEvent() too often, your customers will soon find the experience irritating. So if you do use this helpful new functionality, use it with care.
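One possible way to act on that advice, sketched here as an assumption rather than anything UIA itself provides, is for the app to suppress a repeat notification that shares an activity ID with one raised only moments ago. The NotificationThrottle class and its ShouldRaise() method are hypothetical, and whether a throttle is appropriate at all, and what interval to use, depends on your scenario.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper: suppresses repeat notifications that share an activity ID
// if one with the same ID was raised less than the minimum interval ago.
public sealed class NotificationThrottle
{
    private readonly TimeSpan _minimumInterval;
    private readonly Dictionary<string, DateTime> _lastRaised = new Dictionary<string, DateTime>();

    public NotificationThrottle(TimeSpan minimumInterval)
    {
        _minimumInterval = minimumInterval;
    }

    // Returns true if a notification with this activity ID should be raised at time 'now'.
    // When it returns true, 'now' is recorded as the time the notification was raised.
    public bool ShouldRaise(string activityId, DateTime now)
    {
        if (activityId == null)
        {
            throw new ArgumentNullException(nameof(activityId));
        }

        DateTime last;
        if (_lastRaised.TryGetValue(activityId, out last) && (now - last) < _minimumInterval)
        {
            return false;
        }

        _lastRaised[activityId] = now;
        return true;
    }
}
```

For example, an app might create `new NotificationThrottle(TimeSpan.FromSeconds(5))` once, and only raise the event when `ShouldRaise(activityId, DateTime.UtcNow)` returns true. The AutomationNotificationProcessing value supplied with the event still matters; a throttle like this only limits how often the app asks for an announcement.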

The question of whether it's practical for your desktop app to leverage UiaRaiseNotificationEvent() will depend on what type of app you've built. I expect most Win32 apps won't be leveraging it, because it requires your app to have an IRawElementProviderSimple available, and typically that won't be available unless you've implemented it yourself. If you have already done that, perhaps because you needed to make some custom UI accessible, then great. Just pass your IRawElementProviderSimple to UiaRaiseNotificationEvent() and away you go. But many Win32 apps won't have done that, and as far as I know, it's not possible to access one provided by the UI framework for a standard Win32 control. If that's the case, then unless you're prepared to create an IRawElementProviderSimple just to pass to UiaRaiseNotificationEvent() (which I doubt), you'll probably want to consider one of the other approaches described above, such as using a LiveRegion.

Below are some thoughts on how other types of desktop apps can leverage the new UiaRaiseNotificationEvent().

UWP XAML

Well, when building a UWP XAML app for Windows 10 Build 16299 or later, this is easy. All your app needs to do is get some AutomationPeer for a UI element, and call its RaiseNotificationEvent(). At the time I write this, I've not found guidance on best practices around what you pass into RaiseNotificationEvent(), but it does seem you have a lot of control over specifying how you'd like Narrator to react.

Important: Historically, in some scenarios it could be impractical to deliver the experience you were striving for when Narrator received multiple UIA events from an app at around the same time. For example, depending on the order in which, say, a FocusChanged event and a LiveRegionChanged event arrived at Narrator, one announcement might be interrupted by the other before the first had even started. In practice, sometimes there was nothing you could do to improve the experience. The new UiaRaiseNotificationEvent() gives you much more control over how you'd like a screen reader to react to the event, through the AutomationNotificationProcessing value that you supply. This is a really, really exciting development!

Another interesting point here is that the event doesn't have to be raised from a TextBlock. If there's a TextBlock closely related to the announcement, then it would seem logical to use the TextBlockAutomationPeer associated with that TextBlock in the call to RaiseNotificationEvent(). But if there's no related visible text element, another type of control could be used.

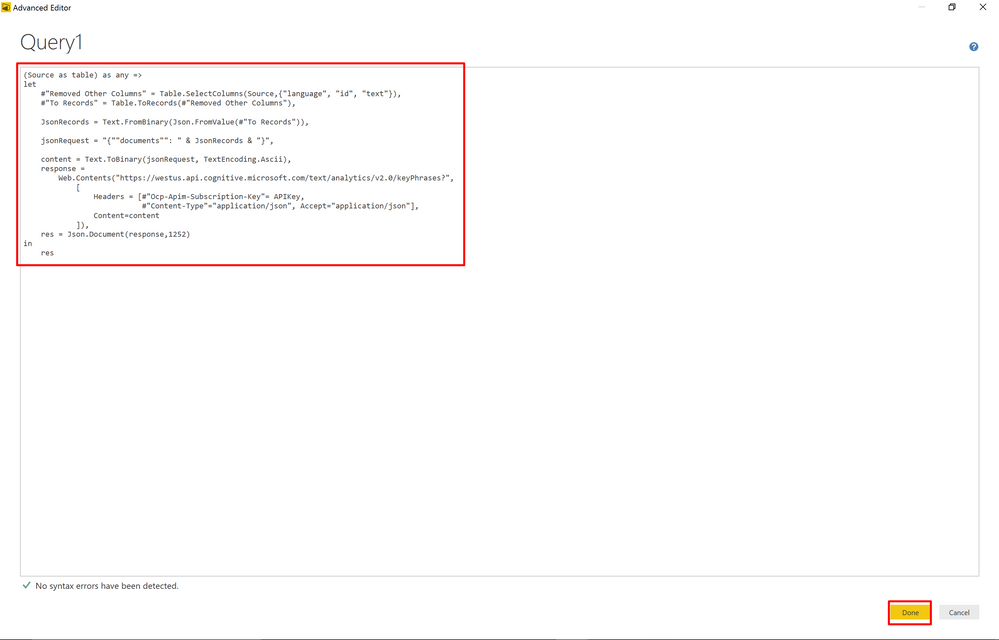

The code below shows a Button control being used to raise a notification event.

```csharp
// Assumes: using Windows.UI.Xaml.Automation.Peers;
ButtonAutomationPeer peer = (ButtonAutomationPeer)
    FrameworkElementAutomationPeer.FromElement(BuyButton);

if (peer != null)
{
    // Todo: Replace these demo values with something appropriate to your scenarios.
    peer.RaiseNotificationEvent(
        AutomationNotificationKind.ActionAborted,
        AutomationNotificationProcessing.ImportantMostRecent,
        "Attempt to buy something did not complete, due to network connection being lost.",
        "270FA098-C644-40A2-A0BE-A9BEA1222A1E");
}
```

Whenever I'm interested in learning which UIA events an app is raising, I always point the AccEvent SDK tool at the app before trying out Narrator. If I point Narrator at the app first and it doesn't make the announcement I expect, I can't be sure that my app actually raised an event that Narrator could react to. But AccEvent can make this clear to me, and the latest version of AccEvent reports details about the UIA event raised in response to a call to the AutomationPeer's RaiseNotificationEvent().

The following string contains details reported by AccEvent in response to the event being raised by the demo code above. The text "Windows.UI.Xaml.dll" in the UIA ProviderDescription property shows that the event was raised through the UWP XAML framework.

UIA:NotificationEvent [NotificationKind_ActionCompleted, NotificationProcessing_ImportantMostRecent, displayString:" Attempt to buy something did not complete, due to network connection being lost.", activityId:"270FA098-C644-40A2-A0BE-A9BEA1222A1E"] Sender: ControlType:UIA_ButtonControlTypeId (0xC350), ProviderDescription:"[pid:3728,providerId:0x0 Main(parent link):Unidentified Provider (unmanaged:Windows.UI.Xaml.dll)]"

Having verified that an event is raised, I can then point Narrator to the app, and verify that Narrator makes a related announcement as expected. The following is the announcement made by Narrator as I invoke a button in a demo app which raises the UIA Notification event using the snippet shown above. Note that the event-related part of the announcement doesn't include any details of the element raising the event. Rather it is exactly the notification string supplied to RaiseNotificationEvent().

Raise UIA notification event, button, Alt, R, Alt+ R,

Space

Attempt to buy something did not complete, due to network connection being lost.

WPF

At the time of writing, WPF does not natively support the new UIA Notification event. It is, however, relatively straightforward for a C# app to access the functionality through interop.

A side note on LiveRegions: For WPF apps that can't leverage the new support for LiveRegions introduced in .NET Framework 4.7.1, I wouldn't recommend trying to add support for LiveRegions yourself through interop. While raising a LiveRegionChanged event yourself through interop is easy, you'd also need to expose a specific LiveSetting property through the element, and that's not at all straightforward. By contrast, an app leveraging the new UIA Notification event only needs to raise the event, which is relatively easy through interop, and worth considering given the value it brings to your app.

Interop code

Ok, so first add the code required to access the native UiaRaiseNotificationEvent() function.

```csharp
using System.Runtime.InteropServices;
using System.Windows.Automation.Provider; // Add a reference to UIAutomationProvider.

internal class NativeMethods
{
    public enum AutomationNotificationKind
    {
        ItemAdded = 0,
        ItemRemoved = 1,
        ActionCompleted = 2,
        ActionAborted = 3,
        Other = 4
    }

    public enum AutomationNotificationProcessing
    {
        ImportantAll = 0,
        ImportantMostRecent = 1,
        All = 2,
        MostRecent = 3,
        CurrentThenMostRecent = 4
    }

    // Only exported by UIAutomationCore.dll on the Windows 10 Fall Creators Update and later.
    [DllImport("UIAutomationCore.dll", CharSet = CharSet.Unicode)]
    public static extern int UiaRaiseNotificationEvent(
        IRawElementProviderSimple provider,
        AutomationNotificationKind notificationKind,
        AutomationNotificationProcessing notificationProcessing,
        string notificationText,
        string notificationGuid);

    [DllImport("UIAutomationCore.dll")]
    public static extern bool UiaClientsAreListening();
}
```

Add classes which will be used to raise the Notification event

In order for UiaRaiseNotificationEvent() to be called, you need an IRawElementProviderSimple to be available. You can get this from an AutomationPeer associated with some existing element in your app. The demo code below gets the IRawElementProviderSimple from a TextBlockAutomationPeer associated with a TextBlock. Because AutomationPeer.ProviderFromPeer() is protected, this requires a custom AutomationPeer class derived from TextBlockAutomationPeer, and so a new class derived from TextBlock which creates that custom peer.

So create a new class called NotificationTextBlock, and add to it a public RaiseNotificationEvent() method, which in turn calls the associated peer's RaiseNotificationEvent().

Important: If the code below is running on a version of Windows prior to the Windows 10 Fall Creators Update, then UiaRaiseNotificationEvent() will not be found, and unless this is accounted for, the app would crash. As such, the attempt to call UiaRaiseNotificationEvent() is wrapped in a try/catch, and if the attempt to call UiaRaiseNotificationEvent() fails due to it not being found, we won't try to call it again.

```csharp
using System;
using System.Windows.Automation.Peers;
using System.Windows.Automation.Provider;
using System.Windows.Controls;

internal class NotificationTextBlock : TextBlock
{
    // This control's AutomationPeer is the object that actually raises the UIA Notification event.
    private NotificationTextBlockAutomationPeer _peer;

    // Assume the UIA Notification event is available until we learn otherwise.
    // If we learn that the UIA Notification event is not available, no instance
    // of the NotificationTextBlock should attempt to raise it.
    private static bool _notificationEventAvailable = true;

    public bool NotificationEventAvailable
    {
        get { return _notificationEventAvailable; }
        set { _notificationEventAvailable = value; }
    }

    protected override AutomationPeer OnCreateAutomationPeer()
    {
        this._peer = new NotificationTextBlockAutomationPeer(this);
        return this._peer;
    }

    public void RaiseNotificationEvent(string notificationText, string notificationGuid)
    {
        // Only attempt to raise the event if we already have an AutomationPeer.
        if (this._peer != null)
        {
            this._peer.RaiseNotificationEvent(notificationText, notificationGuid);
        }
    }
}

internal class NotificationTextBlockAutomationPeer : TextBlockAutomationPeer
{
    private NotificationTextBlock _notificationTextBlock;

    // The UIA Notification event requires the IRawElementProviderSimple
    // associated with this AutomationPeer.
    private IRawElementProviderSimple _reps;

    public NotificationTextBlockAutomationPeer(NotificationTextBlock owner) : base(owner)
    {
        this._notificationTextBlock = owner;
    }

    public void RaiseNotificationEvent(string notificationText, string notificationGuid)
    {
        // If we already know that the UIA Notification event is not available, do not
        // attempt to raise it.
        if (this._notificationTextBlock.NotificationEventAvailable)
        {
            // If no UIA clients are listening for events, don't bother raising one.
            if (NativeMethods.UiaClientsAreListening())
            {
                // Get the IRawElementProviderSimple for this AutomationPeer if we don't
                // have it already.
                if (this._reps == null)
                {
                    AutomationPeer peer = FrameworkElementAutomationPeer.FromElement(this._notificationTextBlock);
                    if (peer != null)
                    {
                        this._reps = ProviderFromPeer(peer);
                    }
                }

                if (this._reps != null)
                {
                    try
                    {
                        // Todo: The NotificationKind and NotificationProcessing values shown here
                        // are sample values for this snippet. You should use whatever values are
                        // appropriate for your scenarios.
                        NativeMethods.UiaRaiseNotificationEvent(
                            this._reps,
                            NativeMethods.AutomationNotificationKind.ActionCompleted,
                            NativeMethods.AutomationNotificationProcessing.ImportantMostRecent,
                            notificationText,
                            notificationGuid);
                    }
                    catch (EntryPointNotFoundException)
                    {
                        // The UIA Notification event is not available, so don't attempt
                        // to raise it again.
                        _notificationTextBlock.NotificationEventAvailable = false;
                    }
                }
            }
        }
    }
}
```

Raise an event

Now that we have the NotificationTextBlock available, we can add it to the app's XAML, and at the appropriate place in the code-behind raise the event.

```xml
<!-- Note: This can be the source of the event without any text being set on it. -->
<local:NotificationTextBlock x:Name="StatusTextBlock" Width="300" />
```

```csharp
// Raise a UIA Notification event.
StatusTextBlock.RaiseNotificationEvent(
    "Attempt to buy something did not complete, due to network connection being lost.",
    "B1980BCF-014D-4A47-9AB2-F23635B6F7FE"); // Todo: Replace this demo guid.
```

With the above code in place in my WPF app, I can point the AccEvent SDK tool at the app and verify that the UIA Notification event is being raised as expected. The following string is reported by AccEvent in response to the event being raised. The text "PresentationCore" in the UIA ProviderDescription property shows that the event was raised through the WPF framework.

UIA:NotificationEvent [NotificationKind_ActionCompleted, NotificationProcessing_ImportantMostRecent, displayString:"Attempt to buy something did not complete, due to network connection being lost.", activityId:"B1980BCF-014D-4A47-9AB2-F23635B6F7FE"] Sender: ControlType:UIA_TextControlTypeId (0xC364), ProviderDescription:"[pid:18948,providerId:0x0 Main(parent link):Unidentified Provider (managed:MS.Internal.Automation.ElementProxy, PresentationCore, Version=4.0.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35)]"

WinForms

For a WinForms app to leverage the new UIA Notification event, things do get rather more involved. While a WPF app can get hold of an IRawElementProviderSimple provided by the WPF framework without too much effort, a WinForms app must create one itself. This might be more work than is practical in some cases, but I found one approach where I feel the work involved is straightforward enough to be justified, given the potential benefits to your customers.

Important: As with a WPF app, the approach described below will not work on versions of Windows prior to the Windows 10 Fall Creators Update, and so involves a try/catch to protect against an exception.

Interop code

First add the interop code required by the WinForms app.

```csharp
using System.Runtime.InteropServices;
using System.Windows.Automation.Provider; // Add a reference to UIAutomationProvider.

public class NativeMethods
{
    public const int WM_GETOBJECT = 0x003D;
    public const int UiaRootObjectId = -25;

    public const int UIA_ControlTypePropertyId = 30003;
    public const int UIA_AccessKeyPropertyId = 30007;
    public const int UIA_IsKeyboardFocusablePropertyId = 30009;
    public const int UIA_IsPasswordPropertyId = 30019;
    public const int UIA_IsOffscreenPropertyId = 30022;

    public const int UIA_TextControlTypeId = 50020;

    public enum AutomationNotificationKind
    {
        ItemAdded = 0,
        ItemRemoved = 1,
        ActionCompleted = 2,
        ActionAborted = 3,
        Other = 4
    }

    public enum AutomationNotificationProcessing
    {
        ImportantAll = 0,
        ImportantMostRecent = 1,
        All = 2,
        MostRecent = 3,
        CurrentThenMostRecent = 4
    }

    [DllImport("UIAutomationCore.dll", EntryPoint = "UiaRaiseNotificationEvent", CharSet = CharSet.Unicode)]
    public static extern int UiaRaiseNotificationEvent(
        IRawElementProviderSimple provider,
        AutomationNotificationKind notificationKind,
        AutomationNotificationProcessing notificationProcessing,
        string notificationText,
        string notificationGuid);

    [DllImport("UIAutomationCore.dll")]
    public static extern bool UiaClientsAreListening();
}
```

Add a class which will be used to raise the Notification event

Given that the WinForms framework can't supply an existing IRawElementProviderSimple to the app, the code below implements one.

Important: The implementation of IRawElementProviderSimple here is the minimum required, such that the UIA representation of a new NotificationLabel object is as close as possible to that of a standard Label control. The goal is to expose through UIA an object which is effectively a standard Label control, but which can also raise the new UIA Notification event. As part of developing the NotificationLabel, I repeatedly pointed the Inspect SDK tool at both the NotificationLabel and a standard Label, and updated the NotificationLabel's IRawElementProviderSimple implementation until the UIA representations of both classes were as similar as possible.

// Add support for the UIA IRawElementProviderSimple interface to a standard WinForms Label control.

public class NotificationLabel : Label, IRawElementProviderSimple

{

// Assume the UIA Notification event is available until we learn otherwise.

// If we learn that the UIA Notification event is not available, no instance

// of the NotificationLabel should attempt to raise it.

static private bool _notificationEventAvailable = true;

static public bool NotificationEventAvailable

{

get

{

return _notificationEventAvailable;

}

set

{

_notificationEventAvailable = value;

}

}

// Override WndProc to provide our own IRawElementProviderSimple provider when queried by UIA.

protected override void WndProc(ref Message m)

{

// Is UIA asking for a IRawElementProviderSimple provider?

if ((m.Msg == NativeMethods.WM_GETOBJECT) && (m.LParam == (IntPtr)NativeMethods.UiaRootObjectId))

{

// Return our custom implementation of IRawElementProviderSimple.

m.Result = AutomationInteropProvider.ReturnRawElementProvider(

Handle,

m.WParam,

m.LParam,

(IRawElementProviderSimple)this);

return;

}

base.WndProc(ref m);

}

// IRawElementProviderSimple implementation.

ProviderOptions IRawElementProviderSimple.ProviderOptions

{

get

{

// Assume the UIA provider is always running in the server process.

return ProviderOptions.ServerSideProvider | ProviderOptions.UseComThreading;

}

}

IRawElementProviderSimple IRawElementProviderSimple.HostRawElementProvider

{

get

{

return AutomationInteropProvider.HostProviderFromHandle(this.Handle);

}

}

public object GetPatternProvider(int patternId)

{

// The standard WinForms Label control only supports the IsLegacyIAccessible pattern,

// and this custom control gets that for free.

return null;

}

    public object GetPropertyValue(int propertyId)
    {
        // Every property returned here replicates the UIA representation
        // of the standard WinForms Label control.
        // The only difference between the UIA properties of the NotificationLabel and the
        // standard Label is the ProviderDescription. The standard Label's ProviderDescription includes
        // "Microsoft: MSAA Proxy (unmanaged:uiautomationcore.dll)",
        // whereas the NotificationLabel's includes something related to the app's implementation
        // of IRawElementProviderSimple.
        switch (propertyId)
        {
            case NativeMethods.UIA_ControlTypePropertyId:
                return NativeMethods.UIA_TextControlTypeId;

            case NativeMethods.UIA_AccessKeyPropertyId:
                // This assumes the control has no access key. If it does have an access key,
                // look for an '&' in the control's text, and return a string of the form
                // "Alt+<the access key character>".
                return "";

            case NativeMethods.UIA_IsKeyboardFocusablePropertyId:
                return false;

            case NativeMethods.UIA_IsPasswordPropertyId:
                return false;

            case NativeMethods.UIA_IsOffscreenPropertyId:
                // Assume the control is always visible on the screen.
                return false;

            default:
                // Returning null tells UIA that this provider doesn't supply the property,
                // so UIA can get it from other providers, such as the host HWND provider.
                return null;
        }
    }

}
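The UIA_AccessKeyPropertyId case in GetPropertyValue() assumes the label has no access key. If your label does use one, the following is a minimal sketch of the lookup described in that comment. The AccessKeyHelper class and its GetAccessKey() method are hypothetical names, not part of WinForms or UIA. The sketch assumes standard WinForms mnemonic rules: a single '&' marks the following character as the access key, and "&&" displays a literal ampersand.

```csharp
using System;

// Hypothetical helper: computes a UIA AccessKey string from WinForms label text.
public static class AccessKeyHelper
{
    // Returns "Alt+<character>" for the first mnemonic in the text, or "" if there is none.
    public static string GetAccessKey(string text)
    {
        if (string.IsNullOrEmpty(text))
        {
            return "";
        }

        // Stop one short of the end: a trailing '&' has no character to mark.
        for (int i = 0; i < text.Length - 1; i++)
        {
            if (text[i] != '&')
            {
                continue;
            }

            char next = text[i + 1];
            if (next == '&')
            {
                // "&&" is an escaped literal ampersand, so skip past both characters.
                i++;
                continue;
            }

            return "Alt+" + next;
        }

        return "";
    }
}
```

With something like this in place, the UIA_AccessKeyPropertyId case could return AccessKeyHelper.GetAccessKey(this.Text) when the label's UseMnemonic property is true. The sketch keeps the character's original casing. If you want an exact match, compare the result with what Inspect or AccEvent reports for a standard Label with the same text.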

Raise an event

Now that the NotificationLabel is available, it can be inserted into the app's UI, and the required event raised through code-behind.

When the UI is created…

this.labelStatus = new <Your namespace>.NotificationLabel();

When the event is to be raised later…

// If we already know that the UIA Notification event is not available, do not attempt to raise it.
if (NotificationLabel.NotificationEventAvailable)
{
    // If no UIA clients are listening for events, don't bother raising one.
    if (NativeMethods.UiaClientsAreListening())
    {
        // TODO: Replace all these demo values passed into UiaRaiseNotificationEvent()
        // with whatever works best for your scenario.
        string notificationString = "Attempt to buy something did not complete, due to network connection being lost.";
        string guidStringDemo = "4F3A7213-6AF5-42D3-8DDD-C50AB83AE782";

        try
        {
            NativeMethods.UiaRaiseNotificationEvent(
                (IRawElementProviderSimple)labelStatus,
                NativeMethods.AutomationNotificationKind.ActionCompleted,
                NativeMethods.AutomationNotificationProcessing.All,
                notificationString,
                guidStringDemo);
        }
        catch (EntryPointNotFoundException)
        {
            // UiaRaiseNotificationEvent isn't exported by UIAutomationCore.dll on this
            // version of Windows, so the UIA Notification event is not available.
            // Don't attempt to raise it again.
            NotificationLabel.NotificationEventAvailable = false;
        }
    }
}

Note: The notification event raised with the above code results in Narrator making the associated announcement, even when the NotificationLabel's Visible property is false. With Visible set to false, the element has no visual representation and is not exposed through the UIA tree.

With the above code in place in my WinForms app, I can point the AccEvent tool from the Windows SDK at the app and verify that the UIA Notification event is being raised as expected. The following string contains the details AccEvent reported when the demo code above raised the event. The text "NotificationLabel" in the UIA ProviderDescription property shows that the event was raised through the NotificationLabel class defined above.

UIA:NotificationEvent [NotificationKind_ActionCompleted, NotificationProcessing_All, displayString:"Attempt to buy something did not complete, due to network connection being lost.", activityId:"4F3A7213-6AF5-42D3-8DDD-C50AB83AE782"] Sender: ControlType:UIA_TextControlTypeId (0xC364), ProviderDescription:"[pid:15796,providerId:0x10085E Main:Nested [pid:8732,providerId:0x10085E Main(parent link):Unidentified Provider (managed:UiaRaiseNotificationEvent_WinForms.NotificationLabel, UiaRaiseNotificationEvent_WinForms, Version=1.0.0.0, Culture=neutral, PublicKeyToken=null)]; Hwnd(parent link):Microsoft: HWND Proxy (unmanaged:uiautomationcore.dll)]"
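The code that raises the event combines three safeguards. It checks a cached flag recording whether UiaRaiseNotificationEvent() exists. It checks UiaClientsAreListening(). It also catches EntryPointNotFoundException, which a P/Invoke call throws when the DLL doesn't export the function, as on Windows versions earlier than the Fall Creators Update.

The sketch below pulls that pattern into a small reusable class. GuardedNativeCall and its members are hypothetical names. The two delegates stand in for the NativeMethods calls, so the logic can be exercised without UIA.

```csharp
using System;

// Hypothetical helper: wraps a native call whose entry point may not exist on
// older versions of Windows, and stops trying once it's known to be missing.
public sealed class GuardedNativeCall
{
    private readonly Func<bool> _clientsAreListening;
    private readonly Action _call;

    public GuardedNativeCall(Func<bool> clientsAreListening, Action call)
    {
        _clientsAreListening = clientsAreListening ?? throw new ArgumentNullException(nameof(clientsAreListening));
        _call = call ?? throw new ArgumentNullException(nameof(call));
    }

    // Starts out true, like NotificationLabel.NotificationEventAvailable,
    // and becomes false after the first EntryPointNotFoundException.
    public bool IsAvailable { get; private set; } = true;

    // Returns true only if the native call was actually made and completed.
    public bool TryInvoke()
    {
        // Skip the call if it's known to be unavailable, or if no client is listening.
        if (!IsAvailable || !_clientsAreListening())
        {
            return false;
        }

        try
        {
            _call();
            return true;
        }
        catch (EntryPointNotFoundException)
        {
            // The DLL doesn't export the function, so don't attempt the call again.
            IsAvailable = false;
            return false;
        }
    }
}
```

In the app, an instance might be built as new GuardedNativeCall(NativeMethods.UiaClientsAreListening, () => NativeMethods.UiaRaiseNotificationEvent(...)), with the arguments shown earlier. This assumes UiaClientsAreListening is declared as returning bool, as its use in an if statement suggests. Unlike the static NotificationEventAvailable property, this flag lives on the instance. Share a single instance if every NotificationLabel should stop trying after the first failure.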

Summary

In some scenarios, whether your app makes your customers aware of certain information can be critically important to them. So once you're convinced that your customers would benefit from an announcement that isn't being made by default, please do consider how you might practically have that announcement made in your situation. Exactly what is practical will depend on the type of your desktop app, and the versions of Windows or the .NET Framework that are available to you. But you'd not want to miss an opportunity to help as many of your customers as possible, and now the UIA Notification event might be an important new option for you.

Guy